Combining a form-fitting fabric and AI/ML, MIT researchers created a smart textile that recognizes a user’s motion—walking, running, and jumping.

Researchers and startups have identified smart textiles, a sub-field of the booming wearables industry, as a technology that may move the needle in a number of settings, including sports, healthcare, and factories. But smart textiles still face two prevailing challenges: reliability and manufacturability.

Last week, researchers at MIT published a paper in which they describe a new technique to boost the performance of smart textiles, allowing the technology to accurately predict what a user is doing based on his or her movements. This research presents a new way to overcome pressure-sensing limitations that have previously stymied smart textile adoption.

MIT has devised a novel fabrication technique that allows e-textiles to sense how a wearer is moving. Image used courtesy of Irmandy Wicaksono/MIT

The Trouble With Resistive-based Pressure Sensing

The team at MIT first turned their attention to resistive-based pressure sensing, one of the most common applications of smart textiles.

Example of a resistive-based textile pressure sensor used by Nextiles. Image used courtesy of Nextiles

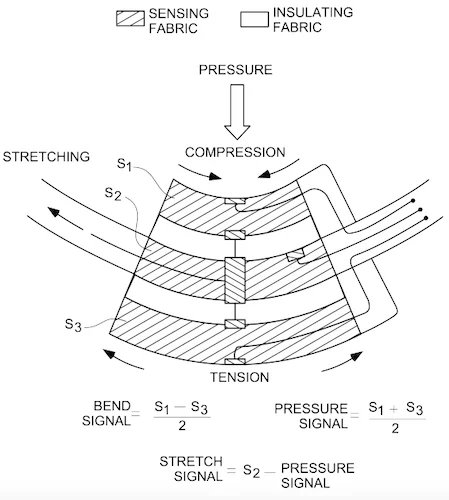

In smart textiles, resistive pressure sensing works by leveraging a piezo-resistive element created by a conducting yarn. The sensor structure itself is often a 2D matrix of standard and conductive yarns, knitted so that a piezo-resistive knit is sandwiched between two layers of conductive yarn. A key feature of the piezo-resistive knit is that its resistance changes with the applied force, allowing pressure sensing within the fabric.
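To make the resistance-to-pressure idea concrete, here is a minimal Python sketch of how a single piezo-resistive cell might be read. It assumes a simple voltage divider (sensor on the supply side, a fixed reference resistor to ground) sampled by a 10-bit ADC. The function name, the 10 kΩ reference resistor, and the ADC range are illustrative assumptions, not details from the MIT paper.

```python
def sensor_resistance(adc_count: int,
                      r_ref_ohms: float = 10_000.0,
                      adc_max: int = 1023) -> float:
    """Estimate a piezo-resistive cell's resistance from one ADC sample.

    Assumed (hypothetical) wiring: V_in -> sensor -> ADC node -> r_ref -> GND,
    so V_node / V_in = r_ref / (r_sensor + r_ref).
    """
    if not 0 < adc_count <= adc_max:
        raise ValueError("adc_count must be in the range 1..adc_max")
    # Rearranging the divider equation gives r_sensor = r_ref * (V_in / V_node - 1).
    return r_ref_ohms * (adc_max / adc_count - 1.0)


# In this wiring, a lower ADC count means a higher sensor resistance.
light_touch = sensor_resistance(93)    # high resistance
firm_press = sensor_resistance(341)    # lower resistance
```

In this sketch, any rubbing between yarn layers would show up as jitter in `adc_count`, and therefore in the estimated resistance, even when the applied force is constant.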

One (literally) pressing challenge with this method is that yarn is soft and pliable, so the layers shift and rub against each other in unwanted ways. This movement introduces noise into the system, ultimately limiting the accuracy, repeatability, and reliability of resistive-based textile pressure sensors.

MIT Researchers Unveil “3DKnITS”

In a new study led by MIT’s Media Lab, researchers presented a solution to these problematic yarn movements.

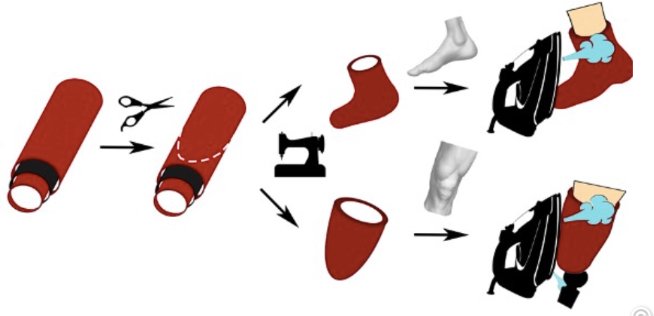

The team presented a manufacturing method built around a process called “thermoforming.” Using this method, thermoplastic yarns are melted at relatively low temperatures so the textile can be shaped while remaining pliable. The material not only becomes a better fit for the user but also hardens slightly, preventing unwanted rubbing and interaction between fibers.

The fabrication process for 3DKnITS. Image used courtesy of Irmandy Wicaksono/MIT

To put thermoforming into action, the researchers created a tubular knit textile with a digital circular knitting machine, combining polyester, spandex, conductive, and TPU yarns in the knitting process. The tube was then cut into the shape of a certain body part and finally melted at low temperatures to conform better to the wearer. This final melting process yielded a more accurate sensor (because of the closer fit) while also removing the deleterious impact of noise in the fibers.

Deep Learning Makes Smart Textiles Even Smarter

The researchers did not stop at thermoforming, however. They then designed a deep learning-based algorithm to interpret the pressure data from the sensor to determine what activities the wearer was performing. The resulting system is called 3DKnITS.

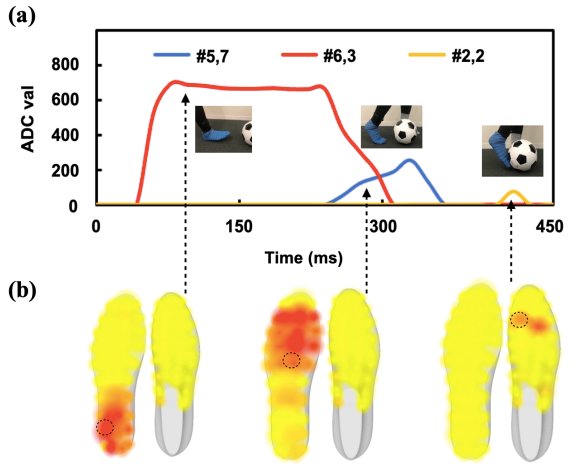

The researchers represented the spatiotemporal 2D pressure sensor data as a heat map, treating the 2D matrix of the fabric as a grid. A specially designed readout circuit scanned the rows and columns of the textile and measured the resistance at each point. From these readings, the researchers built a granular heat map of the pressure distribution and fed it to the machine learning algorithm.
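The row-and-column scan can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the team's readout firmware: `fake_read` is a hypothetical stand-in for the hardware, the 4x4 grid size and resistance values are made up, and the sketch assumes resistance drops as pressure increases.

```python
from typing import Callable, List

Frame = List[List[float]]


def scan_matrix(read_resistance: Callable[[int, int], float],
                n_rows: int, n_cols: int) -> Frame:
    """Visit every row/column crossing once and record its resistance in ohms."""
    return [[read_resistance(r, c) for c in range(n_cols)]
            for r in range(n_rows)]


def to_heat_map(frame: Frame, r_unloaded: float) -> Frame:
    """Map resistance to a 0..1 intensity (assumes resistance drops under load)."""
    return [[min(1.0, max(0.0, 1.0 - r / r_unloaded)) for r in row]
            for row in frame]


def fake_read(row: int, col: int) -> float:
    """Hypothetical hardware stand-in: two pressed cells on an unloaded patch."""
    return 2_000.0 if (row, col) in {(1, 1), (1, 2)} else 10_000.0


frame = scan_matrix(fake_read, n_rows=4, n_cols=4)
heat = to_heat_map(frame, r_unloaded=10_000.0)
for row in heat:
    print(" ".join(f"{value:.1f}" for value in row))
```

Each scan produces one frame. Stacking successive frames over time gives the spatiotemporal data described above.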

Applied as a liner in a shoe, the system can detect gait, biomechanics, and soccer movements. Image used courtesy of Wicaksono et al.

By treating the data as a heat map, the researchers could interpret the data as if it were an image, simplifying the machine-learning aspect of the project. The MIT team says this technique allowed them to develop a personalized convolutional neural network (CNN) that recognized activity and posture in real time based on a user’s interaction with the textile surface.
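To show why an image-like heat map suits a CNN, the following Python sketch runs one pressure frame through a toy network: a convolution layer, ReLU, global average pooling, and a dense layer with softmax. It is not the architecture from the paper. The layer sizes, the 8x8 frame, and the random untrained weights are all assumptions for illustration, and the class labels are borrowed from the motions mentioned at the top of this article.

```python
import math
import random
from typing import List

Grid = List[List[float]]


def conv2d_valid(image: Grid, kernel: Grid) -> Grid:
    """Slide one kernel over the image (no padding, stride 1).

    Like most deep learning libraries, this computes a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu_mean(feature_map: Grid) -> float:
    """ReLU followed by global average pooling: one number per feature map."""
    values = [max(0.0, v) for row in feature_map for v in row]
    return sum(values) / len(values)


def softmax(logits: List[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(heat_map: Grid, kernels: List[Grid],
             dense_weights: Grid, dense_bias: List[float]) -> List[float]:
    """Forward pass: convolution features, then a dense layer and softmax."""
    features = [relu_mean(conv2d_valid(heat_map, k)) for k in kernels]
    logits = [sum(w * f for w, f in zip(weights, features)) + bias
              for weights, bias in zip(dense_weights, dense_bias)]
    return softmax(logits)


ACTIVITIES = ["walking", "running", "jumping"]  # illustrative labels only
rng = random.Random(0)  # fixed seed; these weights are untrained placeholders
kernels = [[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
           for _ in range(4)]
dense_weights = [[rng.uniform(-1, 1) for _ in range(4)] for _ in ACTIVITIES]
dense_bias = [0.0] * len(ACTIVITIES)

heat_map = [[0.0] * 8 for _ in range(8)]  # one made-up 8x8 pressure frame
heat_map[3][4] = 0.8

probs = classify(heat_map, kernels, dense_weights, dense_bias)
print(dict(zip(ACTIVITIES, probs)))
```

With untrained weights the probabilities are meaningless. In a real system the kernels and dense weights would be learned from labeled pressure frames, which is where personalization to a specific wearer would come in.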

The team also reported that their smart textile is highly accurate. According to the published research, the whole system, including a thermoformed sock and the CNN, was able to classify a number of basic activities and yoga poses in real time with 99.6% and 98.7% accuracy, respectively.